Benchmarking CDC Tools: Supermetal vs Debezium vs Flink CDC

Classic "Big Data" stack vs Rust & Arrow.

If you’ve been following my writing recently, you likely know I’m very bullish on the Rust & Apache Arrow data stack.

So when I first learned about Supermetal (almost 2 years ago), I got really excited! Finally, some innovation in the Change Data Capture (CDC) space.

Recently, Supermetal announced support for Kafka sinks, making it a competitor to open-source tools like Debezium and Flink CDC.

I worked with the Supermetal team to run a series of independent benchmarks. This post summarizes my findings.

DISCLOSURE: this work was sponsored by Supermetal. All benchmarks were executed myself in my AWS account. All numbers and findings are shared as is.

About CDC Tools

Supermetal

Supermetal is a new CDC tool implemented using Rust and Apache Arrow. It’s very easy to use: it can be deployed as a single binary. Get the trial version here (includes 1000 hours of free sync).

It scales well vertically (by leveraging available CPU cores). It supports many popular databases and data warehouses. It doesn’t rely on Kafka or any kind of orchestrator: data can be delivered directly from a source to a sink (with optional object storage buffering). You can check its architecture here.

Supermetal supports both live (e.g. reading from a replication slot) and snapshotting modes. Snapshots are always parallelizable.

Supermetal can be configured using the built-in UI. REST API is also available. Finally, a JSON config file in the same format as the API can be used, which I chose to deploy (a better fit for containerized workloads). The config file just describes sources and sinks.

Debezium

Debezium is likely the most popular CDC tool in the world. It’s implemented in Java and typically deployed as a connector in a Kafka Connect cluster. This means it relies on Kafka: CDC data is first ingested into a set of Kafka topics, and then can be delivered to sinks via another connector.

It supports pretty much all relational databases and some non-relational ones.

Debezium supports both live and snapshotting modes as well. An important architectural detail: Debezium connectors (at least the most popular ones, such as MySQL and Postgres) can only be deployed as single-task connectors in the Kafka Connect cluster. Snapshotting can be parallelized by increasing the number of snapshot threads (a relatively new feature).

Debezium connector was deployed with a simple, flat config file.

Flink CDC

Flink CDC originally started as a collection of Flink CDC sources; nowadays, it’s a fully-fledged data integration framework. It’s also implemented in Java using Flink as the engine.

Flink CDC supports both live and snapshotting modes as well. For live mode, it mostly relies on Debezium, since Debezium can be deployed in embedded mode, which doesn’t require a Kafka Connect cluster. For snapshotting, Flink CDC uses a custom implementation that heavily relies on the Flink Source API. Most notably, this is the only of the three implementations that allows horizontal scaling of the snapshotting stage: input chunks (a range of a Postgres table, for example) can be processed in parallel across different TaskManager nodes.

In the case of the Flink CDC framework, you can use YAML-based declarative pipelines, but since I used it as a source connector, I needed to implement a pipeline programmatically.

Test Setup

As you can guess, I had a pretty trivial (and common) goal: replicate data from Postgres to Kafka.

I used the TPC-H dataset with a scale factor (SF) of 50. If you’re not familiar with it, it consists of 8 tables of different sizes. With SF=50, the largest table (lineitem) has 300M rows, the second-largest (orders) has 75M rows, and so forth.

On the infra side, I had:

AWS RDS Aurora Postgres 16, 48 ACUs (increased to 96 later).

AWS MSK with 3 express.m7g.xlarge brokers.

AWS EKS 1.34 using m8i.xlarge nodes (4 CPU cores, 16 GB RAM).

All workloads (Supermetal agent, Kafka Connect node, Flink TaskManager) used a single node pretty much exclusively (configured to request 3.5 CPU cores and 13 GB RAM). Flink TaskManager used 4 task slots.

Regarding versions:

Latest Supermetal build (provided by the Supermetal team as a Docker image).

Flink CDC 3.5.0 with Flink 1.20 deployed using Flink Kubernetes Operator 1.13.

Debezium 3.4.1.Final with Kafka Connect 4.1.1 deployed using Strimzi Operator 0.50.0.

Generated Data

All three tools generated Kafka topics with JSON records. By default, Supermetal uses Debezium envelope schema, and I was able to confirm that it’s actually identical to what Debezium emits, not just payload fields, but message keys and headers too.

Flink CDC provides a standard JsonDebeziumDeserializationSchema for obtaining Debezium records as JSON, but you need to implement a Kafka serializer yourself. The serializer I implemented produced the same Kafka message payloads, but I skipped the message keys and headers, which likely somewhat affected the rates you see below.

Finally, I spot-checked data across topics and didn't observe any data loss.

Snapshotting Mode

I primarily wanted to test snapshotting performance; I expected to see the most drastic differences there. I also tested live mode, but skipped Flink CDC for it (since it essentially wraps Debezium, so performance would be roughly the same or lower).

Ok, let’s explore the benchmarks now!

Supermetal

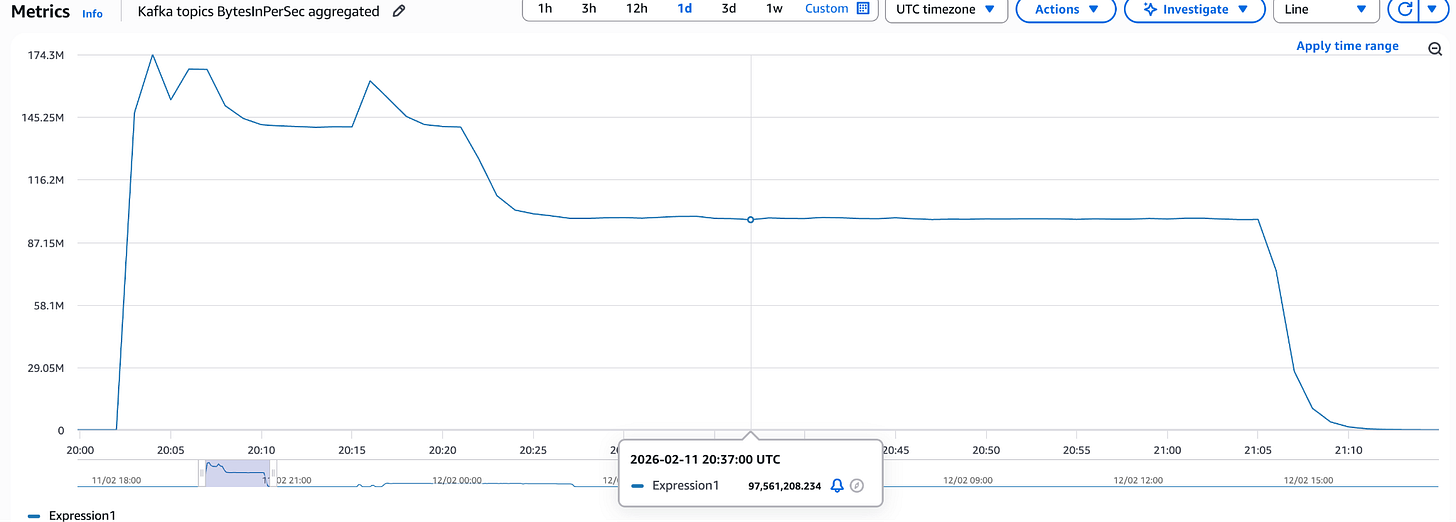

The baseline run with the default configuration looked like this:

It finished in 72 minutes with 174 MB/s peak throughput and 105 MB/s average throughput.

Supermetal team recommended to also test with:

Disabled intra-table chunking (parallel_snapshots_enabled = false). For Kafka sinks, this improves throughput since Kafka partitions are the bottleneck, not table parallelism. This is typically not needed for sinks like data warehouses.

Producer pool size equal to the number of input tables (8).

Another run with the updated configuration finished in 60 minutes with 275 MB/s peak throughput and 123 MB/s average throughput. Spoiler: this is the best result I saw!

Flink CDC

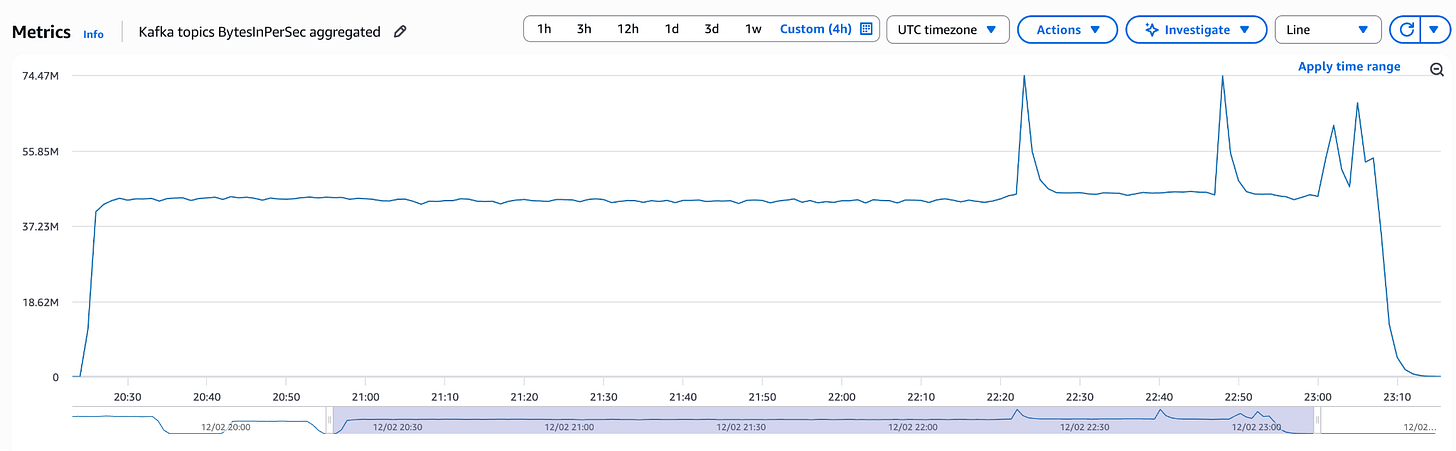

The baseline run with the default configuration looked like this:

It finished in 210 minutes with 29 MB/s peak throughput and 22 MB/s average throughput. Pretty big difference compared to Supermetal, but it’s important to establish a baseline. Can we improve it?

First obvious optimization to try was tweaking the Kafka Producer configuration: using linger.ms of 100 and batch.size of 1000000. Actually, these are the values that are used by Supermetal by default, so it’s only fair to set the same here. But this change didn’t show any performance gains. I may have an explanation below.

Another thing I decided to try was scaling the job horizontally. I added three more TaskManagers, which increased total parallelism from 4 to 16. This led to almost linear improvement in throughput: I was able to consistently achieve 84 MB/s after ramping up. But, of course, it also means additional infrastructure.

Another optimization I tried separately was increasing fetch size (how many rows a connector polls at once) and chunk/split size (how many rows are logically grouped together for processing). I increased the fetch size to 5000 (from the default 1024) and chunk/split size to 50000 (from the default 8096). This led to 54 MB/s.

Debezium

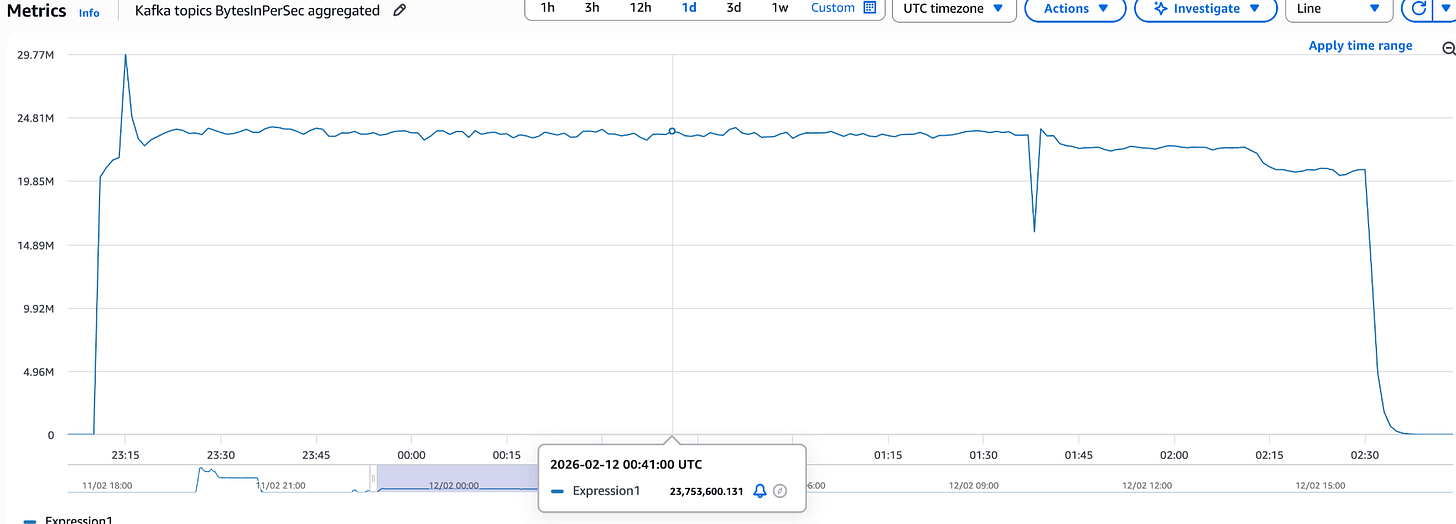

The baseline run with the default configuration looked like this:

It finished in 170 minutes with 74 MB/s peak throughput and 43 MB/s average throughput. Also, much slower than Supermetal and comparable to Flink CDC.

I tried the same obvious optimization with tweaking the Kafka Producer configuration: using linger.ms of 100 and batch.size of 1000000. This time, it was beneficial: I was able to nearly double the throughput and consistently achieve 100 MB/s after ramping up

Another optimization I tried separately was increasing the number of snapshot threads, from 1 to 4. This also gave a nice boost, reaching 70 MB/s. Increasing further to 8 threads didn’t help though.

I also tried combining the tweaked Kafka Producer config and 4 snapshot threads, but it made things worse than the baseline. Too much contention, I guess.

Live Mode

The data generator I used was able to emit the same TPC-H data consistently at a given rate. It only wrote data to the top two tables: lineitem and orders.

Debezium

With the default config, Debezium could keep up with 15k ops/s. Things didn’t look good at 30k ops/s, the replication lag started growing.

I applied the same optimization and increased the producer’s batch.size and linger.ms. That made it possible to sustain 30k ops/s. The replication slot lag remained around 800 MB.

Unfortunately, I couldn’t get my data generator to write more than ~35k ops/s… Even after increasing the Postgres database to 96 ACUs. This is likely possible with the different Postgres setup, but I was happy with the numbers at that point.

I think live Debezium connector throughput could be improved further by increasing max.batch.size and max.queue.size config options, but I couldn’t test it. Also, it’s likely possible to lower the replication slot lag by reducing the flush time on the Kafka Connect side.

Supermetal

Supermetal was able to keep up with 35k ops/s using the default config. The replication slot lag stayed below 100 MB.

When it comes to live data, throughput is just one consideration. Latency can be as important. I admit it wasn’t the goal of my benchmark, but just looking at the replication lag, it seems very promising. Maybe we’ll have a part 2?

Other Notes

Supermetal used the CPU more efficiently (typically ~50% of the allocated 4 cores), while Debezium and Flink CDC mostly stayed around 25%.

Supermetal also used less memory (2GB), whereas Debezium and Flink CDC consumed much more (8GB - 10GB).

Analysis & Conclusion

Parallelization

If you compare the three graphs again, you’ll notice that Debezium and Flink CDC demonstrated the same behaviour: achieving a certain throughput level and roughly staying at that level during the test. This was true regardless of the optimizations I applied (they just affected the rate).

Supermetal behaved differently: you can see a big jump at the beginning, where it processes most of the tables in parallel, and then the throughput decreases as only the biggest table (lineitem) remains.

I think it means Supermetal can parallelize processing more efficiently, so it can likely achieve better throughput with larger tables.

Why is Flink CDC the Slowest?

Flink CDC seemed to be the slowest option, but why is that? It has a sophisticated snapshotting mechanism, but it still appears to be slower than Debezium.

Flink was designed to run at a large scale. There is definitely some overhead that affects workloads with the smaller scale - a distributed system almost always pays some coordination cost compared to a single-node architecture (this is why systems like DuckDB are almost always faster than Spark on small data, for example).

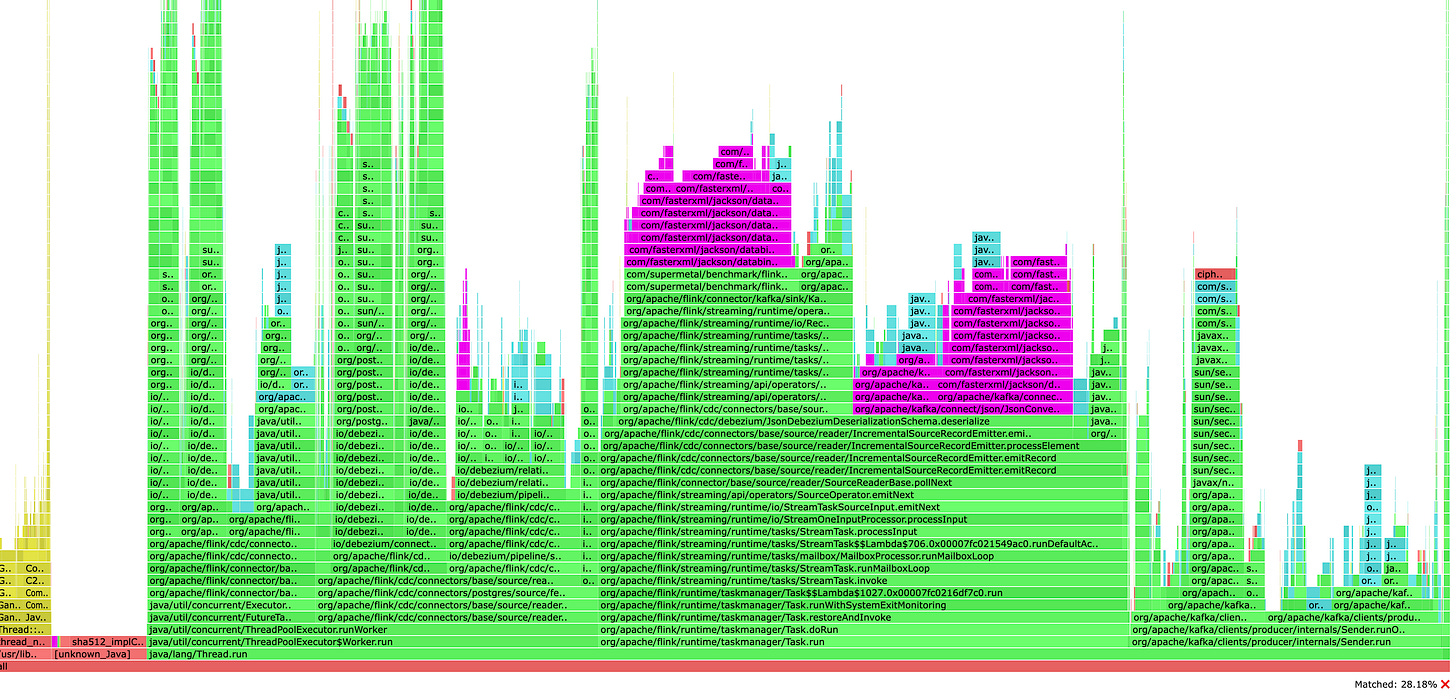

But I think, at least in part, it can be attributed to the amount of JSON serialization/deserialization it performs. Here’s the CPU flamegraph I took:

Purple color highlights JSON serialization/deserialization, which takes almost a third of the CPU. In general, when it comes to stateless streaming systems, data serialization/deserialization is the slowest part. This is especially evident here, since the data goes through a few serialization/deserialization roundtrips (first in Debezium, then in Flink). Jackson, the heavily used Java JSON library, is not the fastest out there. If you’re dealing with JSON in a high-performance Java system, check fastjson2 and simdjson-java.

I Expect Even Bigger Difference With Transformations

Supermetal leverages a highly optimized columnar Apache Arrow format, which will likely deliver even better performance when a transformation (such as a filter or projection) is involved, thanks to low-level compute kernels.

Supermetal doesn't support transformations yet, but it's on the roadmap. I'd love to re-run the benchmark when it ships. I believe that columnar data layout will make a huge difference!

Summary

Supermetal clearly delivers the best performance. Debezium and Flink CDC, once optimized, can get close. At the same time, Supermetal shows much better usage of allocated resources (better CPU and memory utilization).

If you still think that Rewrite Bigdata in Rust is just hype, maybe reconsider. I think we’ll see more tools purposely designed to run very efficiently on modern hardware.

If you need to optimize Debezium or Flink CDC pipelines, look into tuning the Kafka Producer configuration and consider the best way to parallelize snapshots.

It’s worth highlighting that Flink CDC has a working horizontally scalable snapshotting mechanism. If you’re willing to throw more money compute at the problem, you can likely achieve a similar (or higher) level of performance as Supermetal.

Horizontal scalability may seem like a must-have, but ultimately, your database will eventually become the bottleneck. Also, you can go really far by logically sharding your CDC workloads by table: I could’ve deployed 8 supermetal agents or 8 debezium tasks, one for each table. Definitely more painful to manage at scale.

I also have to mention the difference in developer experience: having a single binary and not relying on operators, clusters, and much configuration / glue code felt amazing!