Current 2024

Shifting left to make it right.

Please pledge your support if you find this newsletter useful. I’m not planning to introduce paid-only posts anytime soon, but I’d appreciate some support from the readers. Thank you!

Current is one of the main events in the data streaming space. Current 2024 happened last week in Austin, Texas.

Keynotes

The keynotes were somewhat underwhelming. There were no huge announcements or new product launches. Even Jay’s “one more thing” announcement regarding the WarpStream acquisition already wasn’t big news at the time.

It was great to hear that Confluent’s Tableflow will be in the open preview very shortly. Also, many Flink-related updates from them:

Python and Java Table API support is coming.

Flink private networking and external schema registry support.

Flink available in the Confluent Platform.

I think it’s clear Flink users require much more than a Flink SQL editor.

But probably the main theme of the first day keynote was “AI”1. I feel like every vendor must mention “AI” at any serious tech event nowadays: you want to show your customers and your investors that you can either leverage or assist with building the new wave of “AI” products.

However, in Confluent’s case (or any streaming data infra company, for that matter), I feel like the value is real: many user-facing products need near real-time data, which is hard to implement without streaming. I’m not convinced that a Flink UDF calling OpenAI endpoint is all we need (see more on this below).

The second day keynote was focused on developers. Kafka Docker images, DLQ for Kafka Streams, PATCH endpoints for Kafka Connect, and, of course, the VS Code extension were all very welcomed.

When Tim Berglund appeared on stage, I felt like the world became a little bit brighter. I missed him2! Btw, Tim interviewed me on the Streaming Audio podcast a few years ago.

“Shifting left to make it right”

“Shifting left” was mentioned during the keynotes five times (I counted). I also heard it in the hallways a lot. In case you don’t know, in the data platform context, shifting left means working more closely with operational / application development teams. For example, it means shared ownership over data products or data pipelines with the goal of stopping data artifacts from being treated as a second-class citizen. Data Mesh architecture is one of the ways to implement this principle.

It’s quite refreshing to hear this not just from consultants or vendors but large enterprises as well. I suspect that execs have finally started to understand the importance of high quality data. If you want to build actually useful user-facing “AI” products, you can’t do it without clean and fresh datasets. And yet, most of the enterprises still struggle with basic BI projects…

I really hope to see some change in the industry. I spent some time in my career as a data engineer building data pipelines, and sometimes, working around application teams was a ridiculous process costing the company tens of thousands of dollars. For example, one time at Shopify, we implemented a streaming join between nine (!) tables because the application team couldn’t emit a single domain event containing all the relevant information3.

WarpStream Acquisition

The acquisition was announced a week before, but I’d love to share a few things in this post since we heard its CEO, Richie Artoul, during the first day keynote.

First of all, as you may know, I’m a big fan of WarpStream.

I think their architecture is truly novel and truly cloud-native, and it supports a few very interesting features (check the post above).

Somehow, they were insanely productive and, with just ten-ish people, built a great product that was able to compete with major vendors. I was so excited about their future!

However, they chose an exit. Confluent has made a great move, eliminating a competitor and getting a stellar team.

I know that the WarpStream team still has a lot of autonomy; Confluent also chose to keep the brand (it’s now “WarpStream by Confluent”), so I’m hopeful they’ll be able to innovate at the same pace or even faster (with more resources now).

Some people pointed out that WarpStream uses a different stack (Golang instead of JVM), but, in my opinion, nowadays, large companies are totally fine at integrating at the Kubernetes level. It’s not that important what’s running in a given container.

I’m slightly more concerned about additional services WarpStream is building, like the Schema Registry and the Iceberg support. They’re essentially duplicating Confluent’s existing (or incoming) products. I’d be very curious to see how they resolve this. For example, I was looking forward to using fully managed WarpStream’s Schema Registry; running the open-source version of the Confluent Schema Registry is not fun.

Redpanda Announcements

Similarly, Redpanda hasn’t announced anything at the event, but they did a few major announcements a week before (trying to steal the thunder), so I think it’s important to cover their updates as well.

First of all, they announced Redpanda One: a single, multi-modal engine. It includes Cloud Topics, which is Redpanda’s answer to WarpStream.

I must say, it’s quite impressive. An ability to choose the underlying topic storage (high-latency object storage vs regular on-disk vs ultra low-latency) on a per-topic basis is very powerful! It does mean a more complicated design, though.

The Iceberg support is finally coming to Redpanda. They were actually the first vendor to announce this capability (more than a year ago). I’ve seen the demo, and it looks slick!

Redpanda also made “AI” capabilities its focus lately. But instead of just making it easier to call OpenAI API, they actually integrated Redpanda Connect with LLMs. This fits their Sovereign AI messaging quite well.

Overall, it feels like Redpanda is able to keep up with Confluent and, in some cases, innovate and advance further. I really hope that they’ll succeed, because we need more competion in this space.

Talks

Here’s a selection of great talks I had a chance to attend:

Consistency and Streaming: Why You Care Should About It. If you’ve been following Materialize, you won’t learn anything new, but if the topic of data consistency in the streaming context is new to you, this is a great explainer. My advice to Materialize is to cover the use case of using payload-level timestamp fields as virtual timestamps when consuming Kafka topics - this is what 80% of people care about.

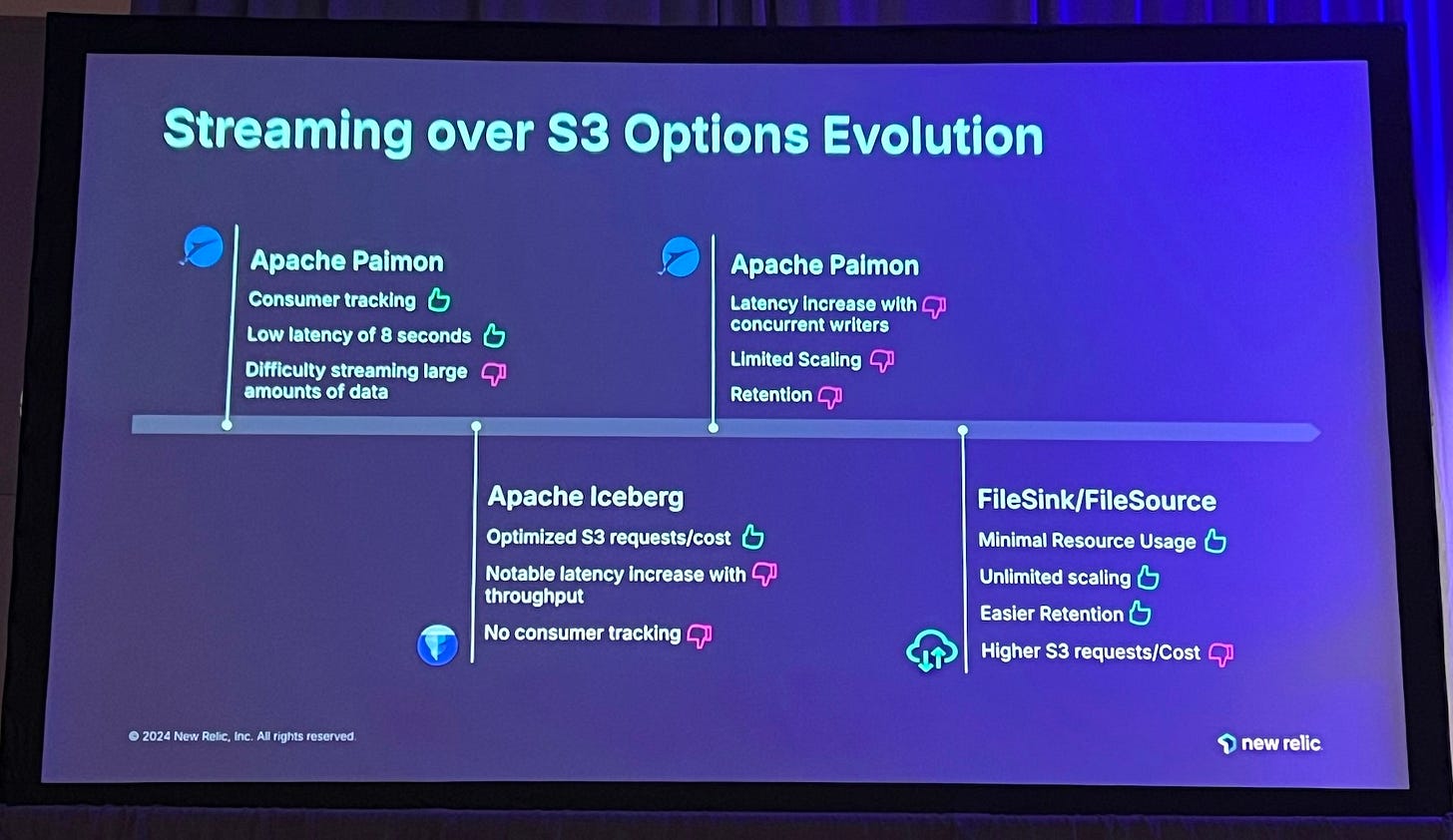

Scaling Data Ingestion: Overcoming Challenges with Cell Architecture. A pragmatic way to scale stream-processing via object storage. Showing a solid example of the cell architecture in the wild. And some real-world comparisons between Iceberg, Paimon and raw files.

Enabling Flink's Cloud-Native Future: Introducing Disaggregated State in Flink 2.0. Flink 2.0 will be getting a state backend that natively supports object storage. This comes with a lot of challenges. It seems like Async APIs will help with reducing the impact of increased latency. The current implementation performs at 40% of the baseline level (disk-based), which is considered pretty good. We’ll see what the final number will be.

Events at the (API) Horizon: How to synthesize domain events & changes from your HTTP/REST APIs. Very interesting talk comparing eventing and REST APIs. A demo of Eventception, which is worth checking if you use a service proxy like Enovy or Kong and care about events.

Flinking Enrichment: Shouldn't This Be Easier? Legendary David Anderson talking about one of the most challenging problems in streaming: data enrichment. Lots of focus on SQL and join semantics, with many great learnings. It’d be great to see more low level examples in the future.

Bridging the Kafka/Iceberg Divide. How do you represent a Kafka topic in the Avro format as an Iceberg table? What if it has incompatible versions? Folks from Confluent sharing their learnings from building Tableflow.

Building a Scalable Flink Platform: A Tale of 15,000 Jobs at Netflix. Nice talk from Netflix showing the evolution of their Flink managed platform that works at scale.

Also in my watchlist:

Speed Wins: The Basics of How to Push More Queries Through Each CPU Core.

The SQL Ecosystem: Powering the Instant World with 40-Year-Old Legacy?

Streamlining Entry into Streaming Analytics with JupyterHub and Apache Flink.

Towards a Self-Tuning Flink Runtime: A Year in Flink’s Scheduling.

Change Data Capture & Kafka: How Slack Transitioned to CDC with Debezium & Kafka Connect.

Finally, please check the Streamlining History: ClickHouse & Flink's Fast-Track for Data Backfills talk from my co-worker Rafael Aguiar! In the world where Iceberg is supported natively by Kafka brokers, we’ll be using something like Flink’s Hybrid Source to merge the two. Rafael explains how to do that but with ClickHouse.

Cool Vendors

I had a chance to talk to a few really cool companies I hadn’t heard of before:

DBOS: a new durable execution engine. It reminds me of Restate. They made some interesting design choices, like relying on Postgres for journaling or heavily using annotations/decorators in their SDKs, but they’re quite comparable in terms of functionality. Overall, the product feels somewhat simpler, probably in a good way.

thatDot, the company behind Quine:

Quine is a streaming graph interpreter; a server-side program that consumes data, builds it into a stateful graph structure, and runs live computation on that graph to answer questions or compute results. Those results stream out in real-time.

Let me expand on this.

I haven’t heard about this approach for stateful stream-processing before. It seems like they try to leverage some properties you can get by representing relations as graphs.

They do make some very strong claims. They say that they can support “infinite joins” because they’re not limited by time windowing “like every other event processor”, which sounds a bit naive.

First of all, you actually don’t need windowing to implement stateful stream-processing with Apache Flink, for example. Windowing is very helpful at limiting the amount of state to maintain, but you might be ok with the additional cost and complexity. In the case of that large streaming pipeline with many joins we implemented at Shopify (which I described above), we didn’t use windowing for most of the joins. We were able to ingest all of Shopify’s sales/order data (via CDC) and keep it in state (which was ~13 TB at the time). The savepointing was somewhat challenging, but it was still doable.

Even though the graphs can help you with representing the data better, you’re still limited by basic resources like memory. And when you start spilling data to disk, you might not be faster than Flink with RocksDB.

Anyway, I’d love for someone to do a proper benchmark comparing Quine and a non-windowed Flink pipeline. Their approach looks very, very interesting.

“That Is Not a Real SQL”

I overheard one of the developer advocates from a well-known company say something like this: “Well, Flink SQL is not a real SQL, you know, like in databases”. This was so frustrating to hear that I decided to write my response here. And by the way, I’m not even a Flink SQL fan (it has its issues).

I acknowledge that modern databases can do amazing things. For example, DuckDB and Umbra/CedarDB really challenge what we think is possible. But there is nothing magical about them. In the end, every database just queries files in a for loop (more or less 🙂). Pretty much every database does things like SQL to AST conversion, building logical and physical plans, and optimizing them.

Flink SQL is exactly the same. It relies on Apache Calcite quite a bit, which is also used by many other data frameworks and query engines. Flink SQL goes through the same stages like SQL to AST conversion, building logical and physical plans, and optimizing them. It just happens to usually work with batches of data received from data streams like Kafka topics, not files.

The world of databases and streaming systems is converging. Perhaps it’s advanced more than you realize.

I reject using “AI” without the quotes in the context of modern “AI” systems like ChatGPT and LLMs. Humanity hasn’t invented actual Artificial Intelligence yet. What we have is Machine Learning.

Tim left Confluent to join StarTree as a VP of DevRel. He returned to Confluent a few months ago in the same capacity.

To be honest, this wasn’t completely that team’s fault. At the time, Shopify didn’t have a reliable way of sending domain events (e.g. with the Outbox pattern), and we couldn’t tolerate any data loss.